Real-time strategy (RTS) games often feature a wide variety of unit types for the player to control. One of my favourite titles from the past, Westwood’s seminal Red Alert, had many classes of differently sized units: small infantry soldiers, medium-sized Jeeps and large tanks. In Red Alert 3, the most recent incarnation of the series, the diversity is increased even further by the introduction of units with terrain-specific movement capabilities; for example, new amphibious units can move across both ground and water areas. From a pathfinding perspective this introduces an interesting question: how can we efficiently search for valid routes for variable-sized agents in rich environments with many types of terrain?

This was the topic of a recent paper which I presented at CIG’08. In the talk I outlined Hierarchical Annotated A* (HAA*), a path planner which is able to efficiently address this problem by first analysing the terrain of a game map and then building a much smaller approximate representation that captures the essential topographical features of the original. In this article (the first in a series of two) I’d like to talk about the terrain analysis aspects of HAA* in some detail and outline how one can automatically extract topological information from a map in order to solve the variable-sized multi-terrain pathfinding problem. Next time, I’ll explain how HAA* is able to use this information to build space-efficient abstractions which allow an agent to very quickly find a high quality path through a static environment.

Clearance Values and the Brushfire Algorithm

Simply put, a clearance value is a distance-to-obstacle metric which measures the amount of traversable space at a discrete point in the environment. Clearance values are useful because they allow us to quickly determine which areas of a map are traversable by an agent of some arbitrary size. The idea of measuring distances to obstacles in pathfinding applications is not a new one. The Brushfire algorithm, for instance, is particularly well known to robotics researchers (though for different reasons than those motivating this article). This simple method, which is applicable to grid worlds, proceeds as follows:

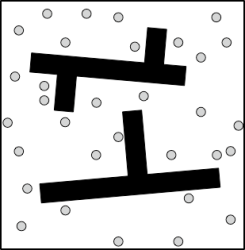

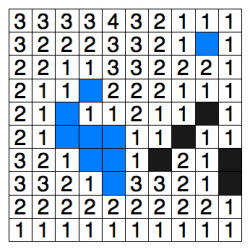

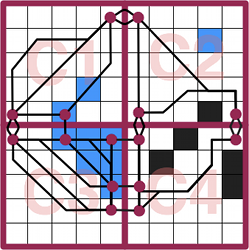

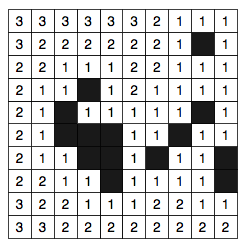

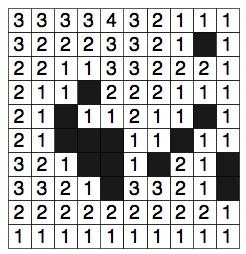

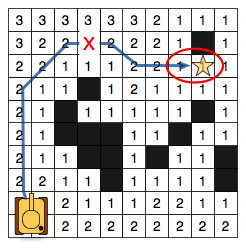

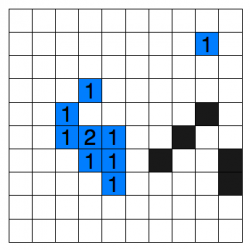

First, assign a value of 1 to each traversable tile immediately adjacent to a static obstacle in the environment (for example, a large rock or the perimeter of a building). Next, each traversable neighbour of an assigned tile is itself assigned a value of 2 (unless a smaller value has already been assigned). The process continues in this fashion until all tiles have been considered. Figure 1 highlights this idea; here white tiles are traversable and black tiles represent obstacles. (NB: the original algorithm actually assigns tiles adjacent to obstacles a value of 2; I use 1 here because it makes more sense for our purposes.)

Figure 1. A small toy map annotated with values computed by the Brushfire algorithm. Black tiles are not traversable.
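The Brushfire procedure described above can be sketched as a breadth-first sweep outward from the obstacles. This is only a minimal illustration, not the original algorithm's reference code: the function name `brushfire`, the boolean grid encoding, the use of 8-connected adjacency and the decision not to treat the map edge as an obstacle are all assumptions of mine.

```python
from collections import deque

# Assumption: tiles are 8-connected, and the map edge is NOT an obstacle.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, -1),
              (0, 1), (1, -1), (1, 0), (1, 1)]


def brushfire(grid):
    """Annotate a grid with Brushfire values.

    grid[y][x] is True for a traversable tile and False for an obstacle.
    Returns a grid of the same shape: 0 for obstacles, k for a traversable
    tile that is k steps from the nearest obstacle, and None for tiles that
    no obstacle can reach.
    """
    height, width = len(grid), len(grid[0])
    values = [[None if grid[y][x] else 0 for x in range(width)]
              for y in range(height)]
    frontier = deque()

    def in_bounds(y, x):
        return 0 <= y < height and 0 <= x < width

    # Step 1: traversable tiles next to an obstacle receive the value 1.
    for y in range(height):
        for x in range(width):
            if grid[y][x] and any(
                in_bounds(y + dy, x + dx) and not grid[y + dy][x + dx]
                for dy, dx in NEIGHBOURS
            ):
                values[y][x] = 1
                frontier.append((y, x))

    # Step 2: spread outward; the first value a tile receives is its smallest.
    while frontier:
        y, x = frontier.popleft()
        for dy, dx in NEIGHBOURS:
            ny, nx = y + dy, x + dx
            if in_bounds(ny, nx) and grid[ny][nx] and values[ny][nx] is None:
                values[ny][nx] = values[y][x] + 1
                frontier.append((ny, nx))
    return values
```

Because the sweep is breadth-first, each tile is assigned exactly once, so the whole map is annotated in time linear in the number of tiles.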

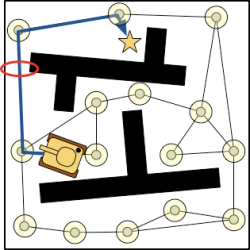

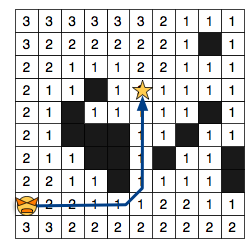

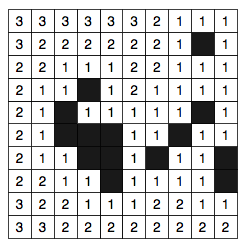

Figure 2. A 1x1 agent finds a path to the goal. All traversable tiles are included in the search space.

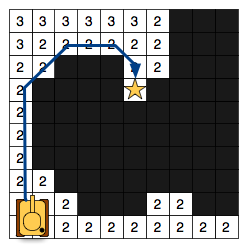

Figure 3. A 2x2 agent finds a path to the goal. Only tiles with clearance > 1 are considered.

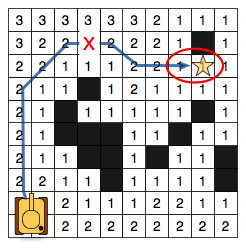

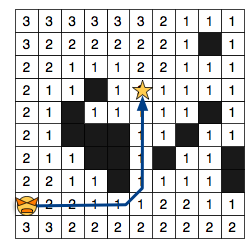

Figure 4. A 2x2 agent fails to find a path due to incorrect clearance values near the goal. Notice that the highlighted tiles are in fact traversable by this agent.

Brushfire makes use of a minimum obstacle distance metric to compute clearances, which works reasonably well in many situations. If we assume our agents (I use the terms agent and unit interchangeably) are surrounded by a square bounding box, we can immediately use the computed values to distinguish traversable locations from non-traversable ones by comparing the size of an agent with the clearance of a particular tile. Figure 2 shows the search space for an agent of size 1×1; in this case, any tile with clearance of at least 1 is traversable. Similarly, Figure 3 shows the search space for an agent of size 2×2. Notice that the bigger agent can occupy far fewer locations on the map: only tiles with clearance values of at least 2. Because this approach is so simple it has seen widespread use, even in video games. At GDC 2007 Chris Jurney (from Relic Entertainment) described a pathfinding system for dealing with variable-sized agents in Company of Heroes, which happens to make use of a variant of the Brushfire algorithm.

Unfortunately, clearances computed using minimum obstacle distance do not accurately represent the amount of traversable space at each tile. Consider the example in Figure 4: here our 2×2 agent incorrectly fails to find a path because all tiles in the bottleneck region are assigned clearance values less than 2. To overcome this limitation we will focus on an alternative obstacle-distance metric: true clearance.

The True Clearance Metric

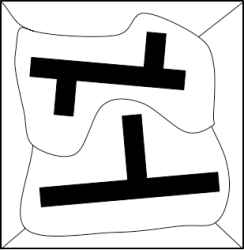

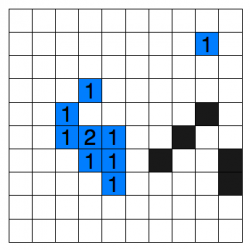

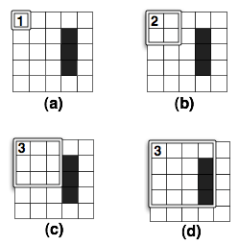

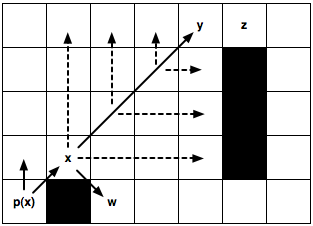

The process of measuring true clearance for a given map tile is very straightforward. Surround each tile with a clearance square (a bounding box, really) of size 1×1. If the tile is traversable, assign it an initial clearance of 1. Next, expand the square symmetrically down and to the right, incrementing the clearance value each time, until no further expansions are possible. An expansion is successful only if all tiles within the square are traversable. If the clearance square intersects an obstacle or the edge of the map, the expansion fails and the algorithm selects another tile to process. The algorithm terminates when all tiles on the map have been considered. Figure 5 highlights the process and Figure 6 shows the result on our running example.

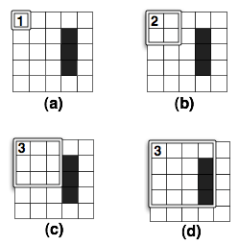

Figure 5. After selecting a tile (a) the square is expanded twice (b, c) before intersecting an obstacle (d).

Figure 6. The toy map from Figure 1, annotated with true clearance values.

Notice that by using true clearance the example from Figure 4 now succeeds in finding a solution. In fact, one can prove that with the true clearance metric it is always possible to find a solution for any agent size if a valid path exists to begin with (i.e. the method is complete; see my paper for the details).
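The clearance-square expansion described in this section can be sketched in a few lines. This is a naive illustration rather than the code from the paper; the names `true_clearance` and `annotate_map` and the boolean grid encoding are assumptions made for the example.

```python
def true_clearance(grid, x, y):
    """Return the true clearance of tile (x, y).

    grid[y][x] is True for a traversable tile and False for an obstacle.
    The result is the side length of the largest all-traversable square
    whose top-left corner is (x, y); obstacles get 0.
    """
    height, width = len(grid), len(grid[0])
    size = 0
    # Grow the square down and to the right, one row and one column at a
    # time, stopping at the map edge or at the first non-traversable tile.
    while x + size < width and y + size < height:
        bottom_row = [(x + i, y + size) for i in range(size + 1)]
        right_column = [(x + size, y + i) for i in range(size)]
        if not all(grid[ty][tx] for tx, ty in bottom_row + right_column):
            break
        size += 1
    return size


def annotate_map(grid):
    """Compute the true clearance of every tile on the map."""
    return [[true_clearance(grid, x, y) for x in range(len(grid[0]))]
            for y in range(len(grid))]
```

Each expansion only inspects the newly added row and column, since the tiles inside the previous square have already been checked.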

Dealing With Multiple Terrain Types

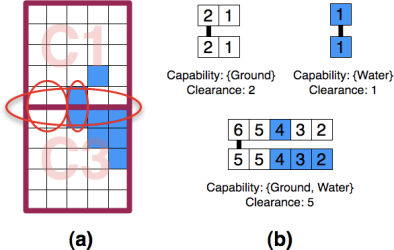

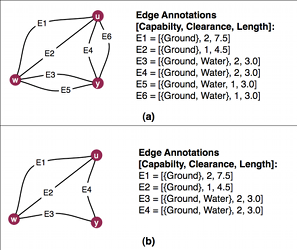

Until now the discussion has been limited to a single type of traversable terrain (white tiles). As it turns out, however, it is relatively easy to apply any clearance metric to maps involving arbitrarily many terrain types. Given a map with n terrain types we begin by identifying the set of possible terrain traversal capabilities an agent may possess. A capability is simply a disjunction of terrains that specifies where an agent can travel. So, on a map with 2 terrains such as {Ground, Water} the corresponding list of all possible capabilities is given by a set of sets; in this case {{Ground}, {Water}, {Ground, Water}}. Note that, for simplicity, I assume the traveling speed across all terrains is constant (but this constraint is easily lifted).
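Enumerating the capabilities amounts to listing every non-empty subset of the terrain types. A small sketch follows; the function name `capabilities` and the choice of `frozenset` to represent a capability are assumptions for illustration only.

```python
from itertools import combinations


def capabilities(terrains):
    """Return every non-empty subset of the given terrain types.

    Each capability is a frozenset so that it can later be used as a
    dictionary key when storing clearance annotations.
    """
    ordered = sorted(terrains)
    return [frozenset(subset)
            for r in range(1, len(ordered) + 1)
            for subset in combinations(ordered, r)]
```

For the two terrains above this yields the three capabilities listed in the text; in general a map with n terrain types has 2^n - 1 capabilities.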

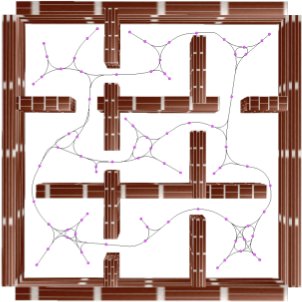

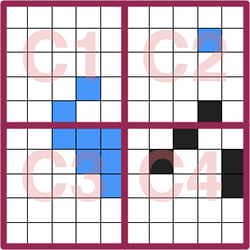

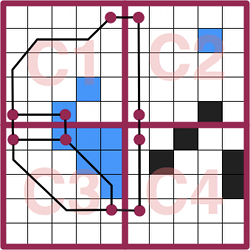

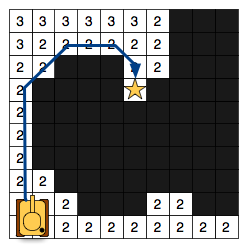

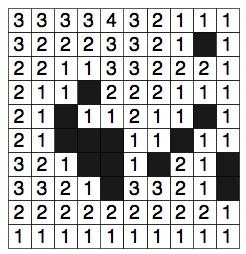

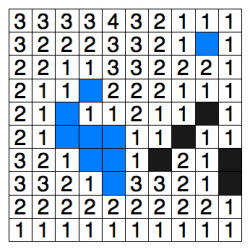

Next, we calculate and assign a clearance value to each tile for every capability. Figures 7-9 show the corresponding clearance values for each capability on our toy map; notice that we’ve converted some of the black (obstacle) tiles to blue to represent the Water terrain type (which some agents can traverse).

Figure 7. True clearance annotations for the {Ground} capability (only white tiles are traversable).

Figure 8. True clearance annotations for the {Water} capability (only blue tiles are traversable).

Figure 9. True clearance annotations for the {Ground, Water} capability (both white and blue tiles are traversable).

Theoretically this means that each tile will store at most 2^(n-1) clearance value annotations: one for every capability that includes the tile's own terrain type, since its clearance under any other capability is zero (again, see the paper for details). I suspect this overhead can probably be reduced with clever use of compression, though I did not attempt more than a naive implementation. Alternatively, if memory is very limited (as is the case with many robot-related applications) one can simply compute true clearance on demand for each tile, thus trading off a little processing speed for more space.
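To make the per-tile storage concrete, here is a naive sketch that annotates a small multi-terrain map. The map encoding (one character per tile, with `G` for Ground, `W` for Water and any other character an obstacle) and the names `clearance` and `annotate` are assumptions of this example, not part of HAA*.

```python
from itertools import combinations


def clearance(terrain, x, y, capability):
    """True clearance of (x, y) when only terrains in `capability` count
    as traversable. Returns 0 if the tile itself is not traversable."""
    height, width = len(terrain), len(terrain[0])
    size = 0
    while x + size < width and y + size < height:
        new_tiles = ([(x + i, y + size) for i in range(size + 1)] +
                     [(x + size, y + i) for i in range(size)])
        if not all(terrain[ty][tx] in capability for tx, ty in new_tiles):
            break
        size += 1
    return size


def annotate(terrain, terrain_types):
    """Map each non-obstacle tile (x, y) to {capability: clearance}.

    Only capabilities that contain the tile's own terrain are stored,
    because every other capability gives a clearance of zero.
    """
    ordered = sorted(terrain_types)
    caps = [frozenset(c) for r in range(1, len(ordered) + 1)
            for c in combinations(ordered, r)]
    annotations = {}
    for y, row in enumerate(terrain):
        for x, tile in enumerate(row):
            if tile in terrain_types:
                annotations[(x, y)] = {
                    cap: clearance(terrain, x, y, cap)
                    for cap in caps if tile in cap
                }
    return annotations


# A hypothetical 3x3 map: ground on the left, water on the right,
# and a row of obstacles along the bottom.
toy_map = ["GGW",
           "GGW",
           "###"]
toy_annotations = annotate(toy_map, {"G", "W"})
```

With two terrain types every annotated tile in `toy_annotations` carries exactly two values, which matches the 2^(n-1) bound.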

Annotated A*

In order to actually find a path for an agent with arbitrary size and capability we can use the venerable A* algorithm, albeit with some minor modifications. First, we must pass two extra parameters to A*’s search function: the agent’s size and capability. Next, we augment the search function slightly so that before a tile is added to A*’s open list we first verify that it is in fact traversable for the given size and capability; everything else remains the same. A tile is traversable only if its terrain type appears in the set of terrains that comprise the agent’s capability and if the corresponding clearance value is at least equal to the size of the agent. To illustrate these ideas I’ve put together a simplified pseudocode implementation of the algorithm, Annotated A*:

    Function: getPath
    Parameters: start, goal, size, capability

    push start onto open list
    while the open list is not empty
        take the best node from the open list as the current node
        if current node is the goal, return path
        else,
            for each neighbour of the current node
                if neighbour is on the closed list, skip it
                else,
                    if neighbour is already on the open list, update weights
                    else,
                        if clearance(neighbour, capability) >= size, push neighbour on the open list
                        else, skip neighbour
            push current node on closed list
    if open list is empty, return failure
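For readers who prefer something runnable, the pseudocode above might be fleshed out as follows. This is a sketch under several assumptions of my own and is not the HOG-based reference implementation: the map is a list of strings with one terrain character per tile, movement is 4-connected with unit cost, the heuristic is Manhattan distance, an agent's position is the top-left tile of its bounding square, and clearance is computed on demand rather than read from stored annotations.

```python
import heapq
from itertools import count


def clearance(terrain, x, y, capability):
    """True clearance of (x, y) for the given set of traversable terrains."""
    height, width = len(terrain), len(terrain[0])
    size = 0
    while x + size < width and y + size < height:
        new_tiles = ([(x + i, y + size) for i in range(size + 1)] +
                     [(x + size, y + i) for i in range(size)])
        if not all(terrain[ty][tx] in capability for tx, ty in new_tiles):
            break
        size += 1
    return size


def get_path(terrain, start, goal, size, capability):
    """Return a list of (x, y) tiles from start to goal, or None."""
    height, width = len(terrain), len(terrain[0])

    def traversable(tile):
        # The only change to plain A*: check clearance against agent size.
        return clearance(terrain, tile[0], tile[1], capability) >= size

    def heuristic(tile):
        return abs(tile[0] - goal[0]) + abs(tile[1] - goal[1])

    if not (traversable(start) and traversable(goal)):
        return None

    tie_breaker = count()  # keeps heap entries comparable
    open_list = [(heuristic(start), next(tie_breaker), start)]
    cost = {start: 0}
    parent = {start: None}
    closed = set()

    while open_list:
        _, _, current = heapq.heappop(open_list)
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = parent[current]
            return path[::-1]
        if current in closed:
            continue  # stale entry left behind by a cheaper route
        closed.add(current)
        x, y = current
        for neighbour in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            nx, ny = neighbour
            if not (0 <= nx < width and 0 <= ny < height):
                continue
            if neighbour in closed or not traversable(neighbour):
                continue
            new_cost = cost[current] + 1
            if new_cost < cost.get(neighbour, float("inf")):
                cost[neighbour] = new_cost
                parent[neighbour] = current
                heapq.heappush(
                    open_list,
                    (new_cost + heuristic(neighbour), next(tie_breaker), neighbour),
                )
    return None
```

Instead of updating weights in place, this sketch pushes a fresh entry onto the heap and ignores stale ones when they are popped, which is a common way to handle the "already on the open list" case with Python's `heapq`.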

So, that’s it! If you’re interested in playing with a working implementation of Annotated A* you can check out the source code I wrote to evaluate it. The code itself is written in C++ and based on the University of Alberta’s freely available pathfinding library, Hierarchical Open Graph (or HOG). HOG compiles on most platforms; I’ve personally tested it on both OSX and Linux and I’m told it works on Windows too. The classes of most interest are probably AnnotatedMapAbstraction, which deals with computing true clearance values for a map, and AnnotatedAStar which is the reference implementation of the search algorithm described here.

In the second part of this article I’m going to expand on the ideas presented here and explain how one can build an efficient hierarchical representation of an annotated map that requires much less memory to store and retains the completeness characteristics of the original. I’ll talk about why this is important and how one can use such a graph to answer pathfinding queries much faster than Annotated A*. At some point soon I also hope to discuss how one can use clearance-based pathfinding on continuous (non-grid) maps.

I noticed recently that the Proceedings for the 2008 IEEE Symposium on Computational Intelligence and Games (CIG’08) are now available
